Wednesday, July 30, 2008

Other recent blog posts

Andy Patrick has a series of useful "efficient development" posts on making game development faster and easier. Check it out:

Efficient Development, Part I

Efficient Development, Part II

Efficient Development, Part III

Efficient Development, Part IV

Next up there were a couple of articles on Gamasutra related to 2D fluid dynamics that are also pretty interesting.

Fluid Dynamics, Part I

Fluid Dynamics, Part II

Christer Ericson has an interesting post on using cellular automata for path finding. And one of the guys over at XNAInfo already has a working XNA demo implementing the idea.

Path finding with cellular automata

Game of Life on the GPU

Got any interesting links? Post 'em in the comments!

Friday, July 18, 2008

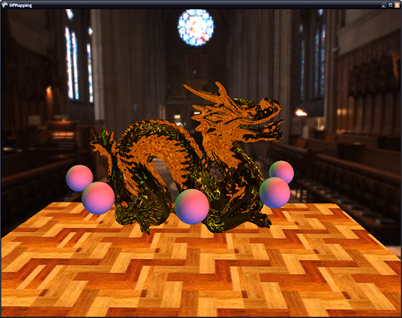

Dual-Paraboloid Variance Shadow Mapping

Edit: Added the video that I recently made

I have to say, I really like variance shadow mapping. It's such a simple (ingenious) technique to implement, but it provides such nice looking results. I haven't had the need to implement the technique before, but I'm glad I did. In the last post we implemented dual-paraboloid shadow mapping, and those of you with a PS 3.0 graphics card were able to have semi-soft shadows with percentage closer filtering. But now, when we get rid of the PCF filter and replace it with variance shadow mapping, we can fit all the code inside the PS 2.0 standard. Anyway, on to the code.

Variance Shadow Mapping Paper + Demo

Building the shadow maps:

Variance shadow mapping is really simple to implement. The first change is to create either an RG32F or RG16F surface format for our front and rear shadow maps (instead of R32F/R16F). This allows us to store the depth of the pixel in the red channel and the squared depth of the pixel in the green channel. So the return statement of our new pixel shader for building the depth/shadow maps is this:

return float4(z, z * z, 0, 1);

Blurring the shadow maps:

Variance shadow mapping improves upon standard shadow mapping by storing a distribution of depths at each pixel (z * z, and z) instead of the single depth (as with standard shadow mapping). And because it stores a distribution of depth, we can blur the shadow maps. This would produce some funky/incorrect results if we were just doing standard shadow mapping with a PCF filter.

So, after we have created our depth maps, we will blur them with a separable Gaussian blur. This will perform two passes on each shadow map; the first will perform a horizontal blur and the second will perform a vertical blur. There is a wealth of information on the internet on how to do this so I won't explicitly cover this. Here's what our front shadow map looks like after being blurred:

Variance shadow mapping:

We build our texture coordinates exactly as in the previous method of shadow mapping, but the depth comparison is a little different. You can refer to the VSM paper for an in-depth discussion, but here is the gist of it. Since we filtered our shadow maps with a Gaussian blur, we need to recover the moments over that filter region. The moments are simply the depth and squared depth we stored in the texture. From these we can build the mean depth and the variance at the pixel. The variance can be interpreted as a quantitative measure of the width of a distribution (Donnelly/Lauritzen). This measure places a bound on the distribution, which Chebyshev's inequality expresses: it gives an upper bound on the fraction of the filter region whose stored depth is at least our pixel's depth, i.e. the fraction that is lit.

float depth;

float mydepth;

float2 moments;

if(alpha >= 0.5f)

{

moments = tex2D(ShadowFrontS, P0.xy).xy;

depth = moments.x;

mydepth = P0.z;

}

else

{

moments = tex2D(ShadowBackS, P1.xy).xy;

depth = moments.x;

mydepth = P1.z;

}

float lit_factor = (mydepth <= moments[0]);

float E_x2 = moments.y;

float Ex_2 = moments.x * moments.x;

float variance = min(max(E_x2 - Ex_2, 0.0) + SHADOW_EPSILON, 1.0);

float m_d = (moments.x - mydepth);

float p = variance / (variance + m_d * m_d); //Chebyshev's inequality (upper bound on the lit fraction)

texColor.xyz *= max(lit_factor, p + .2f); //lighten the shadow just a bit (with the + .2f)

return texColor;
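To see why the filtered moments give a sensible soft edge, here is a minimal CPU-side sketch in Python (not shader code) of the same Chebyshev bound. The function names and the sample depths are made up for illustration, and the sketch leaves out the `SHADOW_EPSILON` clamp and the `+ .2f` lightening used in the shader above.

```python
def filtered_moments(depths):
    """Average depth and squared depth over a filter region (what the blur produces)."""
    n = len(depths)
    return sum(depths) / n, sum(z * z for z in depths) / n


def chebyshev_upper_bound(mean, mean_sq, receiver_depth, min_variance=0.0):
    """Upper bound on the fraction of the filter region that is lit."""
    if receiver_depth <= mean:
        return 1.0  # receiver is in front of the average occluder: fully lit
    variance = max(mean_sq - mean * mean, 0.0) + min_variance
    if variance == 0.0:
        return 0.0  # no spread in depth and receiver is behind it: fully shadowed
    d = receiver_depth - mean
    return variance / (variance + d * d)


# Hypothetical filter region at a shadow edge:
# one quarter of the texels hit an occluder at depth 0.2,
# three quarters hit the receiver itself at depth 0.6.
depths = [0.2, 0.6, 0.6, 0.6]
mean, mean_sq = filtered_moments(depths)
p = chebyshev_upper_bound(mean, mean_sq, receiver_depth=0.6)
```

For this two-depth case the bound works out to exactly the unoccluded fraction (three quarters), which is why blurring the moments produces a smooth penumbra instead of the artifacts you would get from blurring plain depth.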

5x5 Gaussian Blur

9x9 Gaussian Blur
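For anyone who wants a concrete reference for the separable blur, here is a minimal CPU-side sketch in Python rather than shader code. The function names, the clamp-at-the-edge addressing and the default sigma are assumptions made for illustration, not values taken from the demo. A radius of 2 corresponds to a 5x5 blur and a radius of 4 to a 9x9 blur.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return normalized 1D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(image, kernel, horizontal):
    """Blur a 2D grid of (z, z*z) moment pairs along one axis, clamping at the edges."""
    height, width = len(image), len(image[0])
    radius = len(kernel) // 2
    result = [[(0.0, 0.0)] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            m1 = m2 = 0.0
            for k, w in enumerate(kernel):
                offset = k - radius
                sx = min(max(x + offset, 0), width - 1) if horizontal else x
                sy = y if horizontal else min(max(y + offset, 0), height - 1)
                m1 += w * image[sy][sx][0]
                m2 += w * image[sy][sx][1]
            result[y][x] = (m1, m2)
    return result


def separable_blur(image, radius=2, sigma=1.0):
    """Horizontal pass followed by a vertical pass with the same 1D kernel."""
    kernel = gaussian_kernel(radius, sigma)
    return blur_1d(blur_1d(image, kernel, True), kernel, False)
```

Two 1D passes cost 2 * (2 * radius + 1) samples per texel instead of (2 * radius + 1) squared for a full 2D kernel, which is the reason for splitting the blur.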

And there you go. Nice looking dual-paraboloid soft shadows thanks to variance shadow mapping.

As before, your card needs to support either RG16F or RG32F formats (sorry again Charles :) ). You can refer to the VSM paper and demo on how to map 2 floats to a single ARGB32 pixel if your card doesn't support the floating point surface formats.

Thursday, July 17, 2008

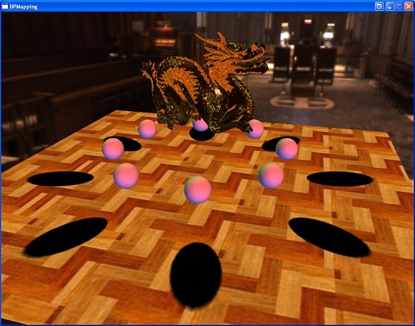

Dual-Paraboloid Shadow Maps

Last time I introduced using dual-paraboloid environment mapping for reflections. Well now we're going to apply the same process to shadows. So if you haven't looked at my previous post, read it over before going on.

Creating the depth/shadow maps is exactly the same as when we created the reflection maps with one exception. Instead of outputting color in the pixel shader, we output the depth of the 3d pixel, like so:

return depth.x / depth.y;

Where depth.x is the depth of the pixel and depth.y is the w component. And here is the resulting depth/shadow map for the front hemisphere.

Now, to map the shadows the process is also very similar to how we generated the reflections. We follow a similar process in the pixel shader:

- Generate the texture coordinates for the front and rear paraboloids

- Generate the depth of the pixel

- Test to see if the pixel is in shadow

We generate the texture coordinates exactly as when we generated the reflection texture coordinates. To generate the depth of the pixel we take the length of the vector from the vertex to the origin of the paraboloid (0, 0, 0) and divide by the light attenuation. Also, to check which hemisphere we are in, we calculate an alpha that is the z value of the transformed vertex divided by the light attenuation and offset by .5f:

float L = length(pos);

float3 P0 = pos / L;

float alpha = .5f + pos.z / LightAttenuation;

//generate texture coords for the front hemisphere

P0.z = P0.z + 1;

P0.x = P0.x / P0.z;

P0.y = P0.y / P0.z;

P0.z = L / LightAttenuation;

P0.x = .5f * P0.x + .5f;

P0.y = -.5f * P0.y + .5f;

float3 P1 = pos / L;

//generate texture coords for the rear hemisphere

P1.z = 1 - P1.z;

P1.x = P1.x / P1.z;

P1.y = P1.y / P1.z;

P1.z = L / LightAttenuation;

P1.x = .5f * P1.x + .5f;

P1.y = -.5f * P1.y + .5f;
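The same lookup can be sanity-checked on the CPU. The following is a small Python sketch of the math above, assuming `pos` is already in the light's space; the function name is made up, and unlike the shader it only computes the coordinates for the hemisphere that gets selected.

```python
import math


def paraboloid_lookup(pos, light_attenuation):
    """Return (use_front, u, v, depth) for a light-space position."""
    x, y, z = pos
    length = math.sqrt(x * x + y * y + z * z)
    dx, dy, dz = x / length, y / length, z / length

    # Same test as the shader's alpha >= 0.5, which boils down to z >= 0.
    use_front = (0.5 + z / light_attenuation) >= 0.5

    # Front paraboloid divides by (1 + z), rear paraboloid by (1 - z).
    denom = 1.0 + dz if use_front else 1.0 - dz

    # Scale and bias from [-1, 1] to [0, 1], flipping y for D3D texture space.
    u = 0.5 * (dx / denom) + 0.5
    v = -0.5 * (dy / denom) + 0.5

    return use_front, u, v, length / light_attenuation
```

A point straight down either axis lands in the center of its map at (0.5, 0.5), and points on the z = 0 split plane land on the edge of the unit circle, which is where the seams show up.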

Now that we have generated our texture coordinates we need to test the depth of the pixel against the depth in the shadow map. To do this we index either the front or rear shadow map with the texture coordinates we generated to get the stored depth and compare this to our depth. If the stored depth is less than our depth, then the pixel is in shadow.

float depth;

float mydepth;

if(alpha >= 0.5f)

{

depth = tex2D(ShadowFrontS, P0.xy).x;

mydepth = P0.z;

}

else

{

depth = tex2D(ShadowBackS, P1.xy).x;

mydepth = P1.z;

}

//lighten the shadow just a bit so it isn't completely black

if((depth + SHADOW_EPSILON) < mydepth)

texColor.xyz *= 0.3f;

return texColor;

And that's it. Now we have dual-paraboloid shadow mapping. If you have a pixel shader 3.0 graphics card, then the shadow also has a percentage closer filter applied to it. You also may notice seams in the shadows. This is because the splitting plane of the paraboloids is the xy-plane (since the paraboloids look down the +/- z-axis). This is one of the problems of using paraboloid mapping for shadows. You have to be careful where you place the split plane to avoid this situation. Pixels that are in the center of either hemisphere suffer little distortion. But this is just a tutorial so I didn't worry too much about it.

Also, your graphics card must be able to support R32F or R16F surface formats to run the demo out of the box (sorry Charles ;) ). Otherwise, you must use the ARGB32 format and pack the depth values in all 4 channels. Here is some code to pack/unpack to/from an ARGB32 surface format. You pass the depth value to the pack method when you render to the shadow maps, and you pass the float4 color to the unpack method when you fetch from the shadow maps. I decided not to implement this so the code wouldn't become complicated by something that doesn't add to the tutorial.

//pack the depth in a 32-bit rgba color

float4 mapDepthToARGB32(const float value)

{

const float4 bitSh = float4(256.0 * 256.0 * 256.0, 256.0 * 256.0, 256.0, 1.0);

const float4 mask = float4(0.0, 1.0 / 256.0, 1.0 / 256.0, 1.0 / 256.0);

float4 res = frac(value * bitSh);

res -= res.xxyz * mask;

return res;

}

//unpack the depth from a 32-bit rgba color

float getDepthFromARGB32(const float4 value)

{

const float4 bitSh = float4(1.0 / (256.0 * 256.0 * 256.0), 1.0 / (256.0 * 256.0), 1.0 / 256.0, 1.0);

return(dot(value, bitSh));

}
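To convince yourself that the two functions are inverses, here is a rough Python mirror of them. The Python names are made up, and the sketch works in full floating point, so it does not model the rounding to 8 bits per channel that a real ARGB32 render target would apply.

```python
import math

BIT_SHIFT = (256.0 ** 3, 256.0 ** 2, 256.0, 1.0)
INV_BIT_SHIFT = (1.0 / 256.0 ** 3, 1.0 / 256.0 ** 2, 1.0 / 256.0, 1.0)


def pack_depth(value):
    """Mirror of mapDepthToARGB32 for a depth value in [0, 1)."""
    # frac(value * bitSh): keep only the fractional part of each scaled copy.
    x, y, z, w = (math.modf(value * s)[0] for s in BIT_SHIFT)
    # res -= res.xxyz * mask: remove from each channel the bits that the
    # next finer channel already carries.
    return (x, y - x / 256.0, z - y / 256.0, w - z / 256.0)


def unpack_depth(rgba):
    """Mirror of getDepthFromARGB32: weighted sum of the four channels."""
    return sum(c * s for c, s in zip(rgba, INV_BIT_SHIFT))
```

Expanding the dot product shows that everything except the original value cancels, so the round trip is exact before quantization.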

Next time I'll introduce using variance shadow mapping with our dual-paraboloid shadow mapping to give nice soft shadows that we can still use with pixel shader 2.0 cards.

Wednesday, July 16, 2008

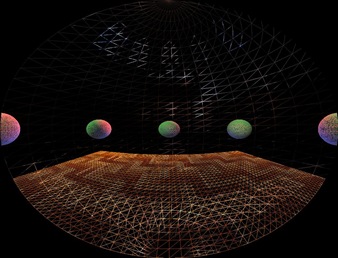

Dual-Paraboloid Reflections

I recently had to investigate dual-paraboloid reflections at work for an unnamed console. What are these, you ask? Great question! :) Let's start with some background.

The standard way of calculating reflections is to use an environment map, more specifically a cube map. In my last tutorial on reflections, this basic type of reflection mapping was used to compare against billboard impostor reflections. Now, cubemaps are great for static scenes, and are relatively low cost to perform a texture fetch on in a shader. However, if you have a dynamic scene you have to update all 6 sides of the cubemap (this is not strictly true: aggressive culling and other optimizations can guarantee at most 5 sides). Holy crap, now we have to render our scene 6 times!

This is where dual-paraboloid reflections come in. They are a view-independent method of rendering reflections just like cubemaps. Except you only have to update 2 textures, not 6! The downside is that you are going to lose quality for speed, but unless you have to have high-quality reflections, paraboloid reflections will probably provide sufficient results.

Reference articles:

View-Independent Environment Maps

Shadow Mapping for Hemispherical and Omnidirectional Light Sources

Dual Paraboloid Mapping in the Vertex Shader

In the interest of keeping this post from getting too long, I won't go into great detail on the mathematical process. I suggest you refer to the first and third papers for an in-depth discussion on the details.

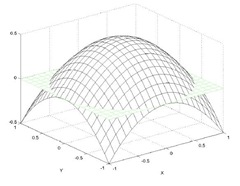

Now let's move on to what exactly paraboloid mapping is, starting with the paraboloid itself.

The basic idea is that for any origin O, we can divide the scene into two hemispheres, front and rear. For each of these hemispheres there exists a paraboloid surface that will focus any ray traveling in the direction of O into the direction of the hemisphere. Here is a 2D picture demonstrating the idea:

A brief math overview:

What we need to find is the intersection point where the incident ray intersects the paraboloid surface. To do this we need to know the incident ray and the reflected ray. Now because the paraboloid reflects rays in the same direction, it is easy to compute the reflection vector: it's the forward direction of the hemisphere! So the front hemisphere's reflection vector will always be <0, 0, 1> and the rear hemisphere's reflection vector will always be <0, 0, -1>. Easy! And the incident ray is calculated the same as with environment mapping by reflecting the ray from the pixel position to the eye across the normal of the 3D pixel.

Now all we have to do is find the normal of the intersection which we will use to map our vertices into paraboloid space. To find the normal, we add the incident and reflected vectors and divide the x and y components by the z value.
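The normal construction can be checked numerically. Below is a minimal Python sketch for the front hemisphere only, assuming the incident direction is given as the vector from the paraboloid origin toward the scene point; the function names are made up for illustration.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def front_paraboloid_normal(direction):
    """Add the unit incident direction and the forward vector <0, 0, 1>,
    then divide by z so the result has the form (x, y, 1).
    Undefined (division by zero) for a direction pointing exactly at <0, 0, -1>."""
    d = normalize(direction)
    hx, hy, hz = d[0], d[1], d[2] + 1.0
    return (hx / hz, hy / hz, 1.0)


def reflect_about(v, normal):
    """Mirror the unit vector v about the given normal (normal may be unnormalized)."""
    n = normalize(normal)
    k = 2.0 * sum(a * b for a, b in zip(v, n))
    return tuple(k * nc - vc for nc, vc in zip(n, v))


d = normalize((3.0, 0.0, 4.0))
n = front_paraboloid_normal(d)   # x and y are the paraboloid-space coordinates
r = reflect_about(d, n)          # comes out as the forward direction
```

Mirroring the incident direction about this normal gives back <0, 0, 1>, which is the property the paraboloid is built around, and the x and y of the scaled normal are the same `x / (z + 1)` and `y / (z + 1)` values the shaders compute.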

Generating the Paraboloid maps:

What we are basically going to do is place each vertex ourselves in the vertex shader, at the position where the paraboloid distortion puts it. First we need to transform the vertex by the view matrix of the paraboloid 'camera'. We don't apply the projection matrix since we're going to place the point ourselves, so the matrix named WorldViewProj below presumably holds only the world and view transforms:

output.Position = mul(input.Position, WorldViewProj);

Next we need to find the vector from the vertex to the origin of the paraboloid, which is simply:

float L = length( output.Position.xyz );

output.Position = output.Position / L;

Now we need to find the x and y coordinates of the point where the incident ray intersects the paraboloid surface.

output.Position.z = output.Position.z + 1;

output.Position.x = output.Position.x / output.Position.z;

output.Position.y = output.Position.y / output.Position.z;

Finally we set the z value as the distance from the vertex to the origin of the paraboloid, scaled and biased by the near and far planes of the paraboloid 'camera'.

output.Position.z = (L - NearPlane) / (FarPlane - NearPlane);

output.Position.w = 1;
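Put together, the vertex placement steps can be sketched on the CPU as follows. This is a Python illustration of the math rather than the shader itself; the function name is made up, and `view_pos` is assumed to be the vertex already transformed into the paraboloid camera's space.

```python
import math


def paraboloid_project(view_pos, near_plane, far_plane):
    """Return the (x, y, z, w) output position for the front paraboloid."""
    x, y, z = view_pos
    length = math.sqrt(x * x + y * y + z * z)
    dx, dy, dz = x / length, y / length, z / length

    denom = dz + 1.0
    if denom <= 0.0:
        # A point exactly behind the camera has no image on the front paraboloid.
        raise ValueError("direction points exactly backwards; the mapping is undefined")

    out_x = dx / denom
    out_y = dy / denom
    # Depth is the distance to the origin, scaled and biased by the near and far planes.
    out_z = (length - near_plane) / (far_plane - near_plane)
    return (out_x, out_y, out_z, 1.0)
```

Everything in the front hemisphere ends up inside the unit circle in x and y, and points on the z = 0 plane land exactly on its edge.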

And the only thing we need to add in the pixel shader is to make sure to clip pixels that are behind the viewpoint using the intrinsic clip() function of HLSL.

Reflections with paraboloid maps:

In the reflection pixel shader we will: generate the reflection vector the same way as cube mapping, generate texture coordinates for both the front and rear paraboloids' textures, and blend the samples taken from the textures.

The texture coordinates are generated exactly as we generated them in the map generation step. We also scale and bias them to correctly index a D3D texture. Then we take a sample from each map and pick the sample with the greater color value:

// calculate the front paraboloid map texture coordinates

float2 front;

front.x = R.x / (R.z + 1);

front.y = R.y / (R.z + 1);

front.x = .5f * front.x + .5f; //bias and scale to correctly sample a d3d texture

front.y = -.5f * front.y + .5f;

// calculate the back paraboloid map texture coordinates

float2 back;

back.x = R.x / (1 - R.z);

back.y = R.y / (1 - R.z);

back.x = .5f * back.x + .5f; //bias and scale to correctly sample a d3d texture

back.y = -.5f * back.y + .5f;

float4 forward = tex2D( FrontTex, front ); // sample the front paraboloid map

float4 backward = tex2D( BackTex, back ); // sample the back paraboloid map

float4 finalColor = max(forward, backward);

Optimizations:

If you align the paraboloid 'camera' such that it is always facing down the +/- z axis, you don't need to transform the vertices by the view matrix of the camera. You only need to do a simple translation of the vertex by the camera position.

Conclusion:

As you can see, paraboloid maps give pretty good results. They won't give you the quality of cubemaps, but they are faster to update and require less memory. And in the console world, requiring less is almost reason enough to pick this method over cubemaps.

One drawback of paraboloid maps is that the environment geometry has to be sufficiently tessellated or we will have noticeable artifacts on our reflector. Another drawback is that on spherical objects we will see seams. However, with objects that are reasonably complex (such as the Stanford bunny or dragon) and are not simple shapes, the seams will not be as noticeable.

Next time I will present dual-paraboloid mapping for use with real-time omnidirectional shadow mapping of point lights.